Do you want leads without spending $30+ a month? Here's my free alternative.

Python scraping and web browsing

I'm serious. In this brief but useful rundown, I'm going to show you what I've done for job searches and lead generation that has saved me many hours. It's quick and easy and doesn't take a lot of code, but you will need to get your hands dirty to find which websites* are going to be lead generators.

*I'm specifically looking for unresponsive and outdated websites

So, what do we do first? Well, initially I'll have to assume that you...

- know a little bit of Python

- can run a Python program (I love Jupyter notebooks)

- can install all necessary libraries

Part 1 - Ok, let's get to it.

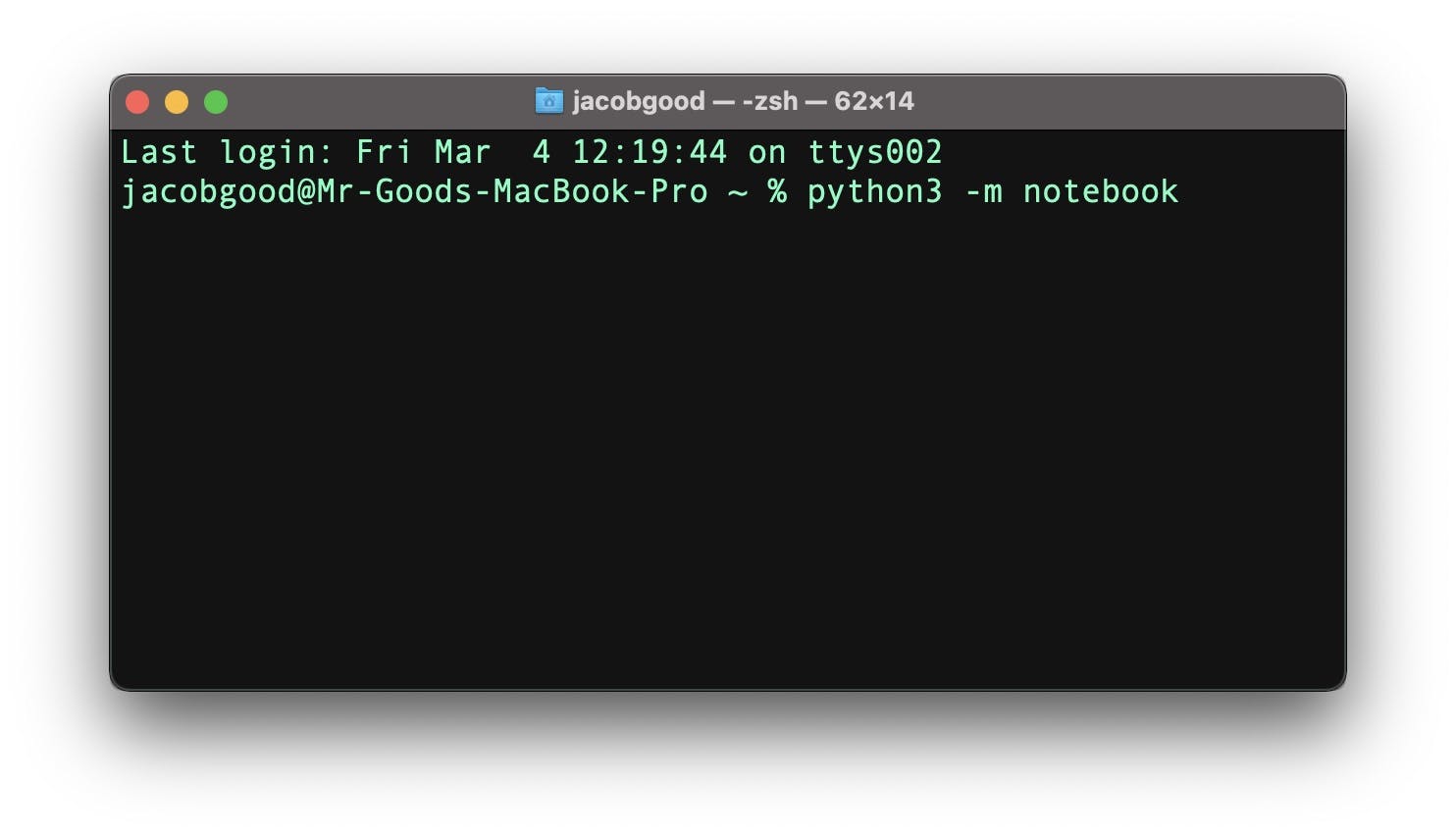

First, let's open up a Jupyter notebook from the command line.

Easy enough. Now, navigate to a directory and folder that you want to use for this small project. Save it and then open up a new notebook.

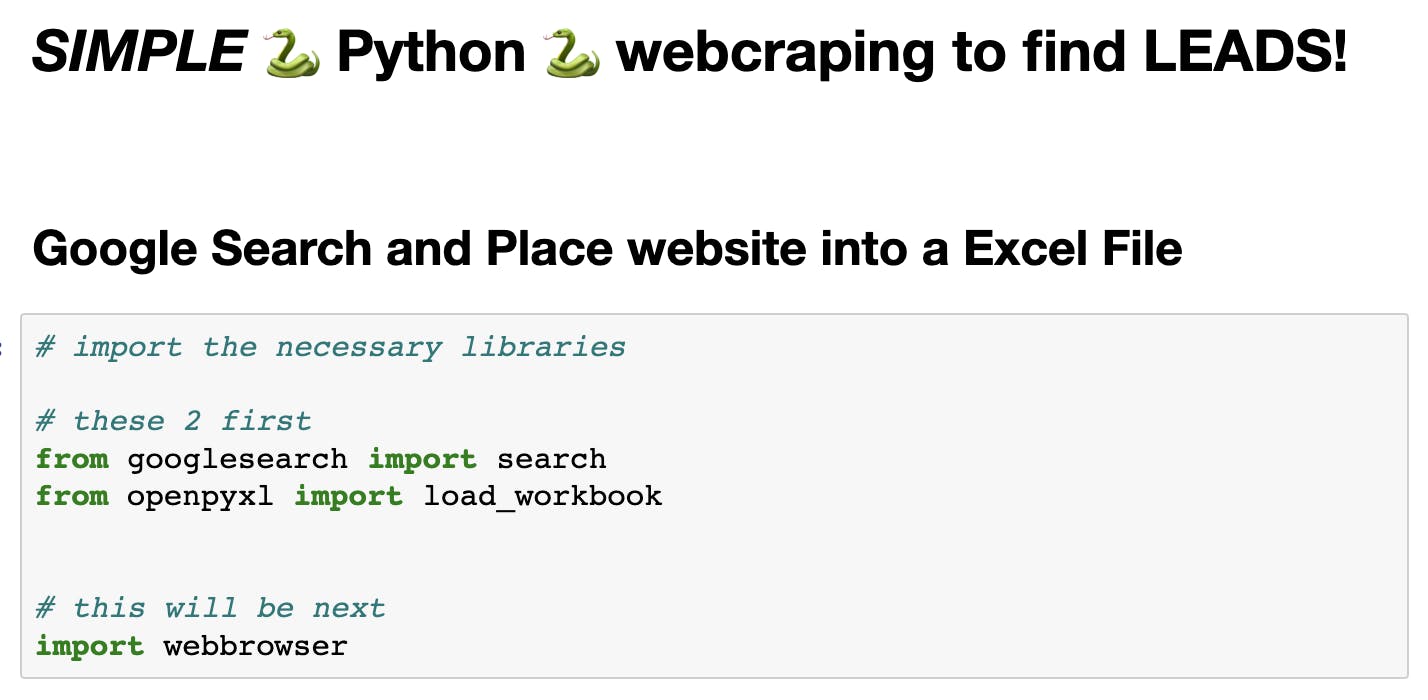

Part 2 - Import all the necessary libraries

Part 3 - Create a New Excel Spreadsheet

I choose to do this because opening up Excel takes a few seconds. This way, Excel is already running and writing to it is faster. Be sure to save in the SAME FOLDER as your Jupyter notebook.

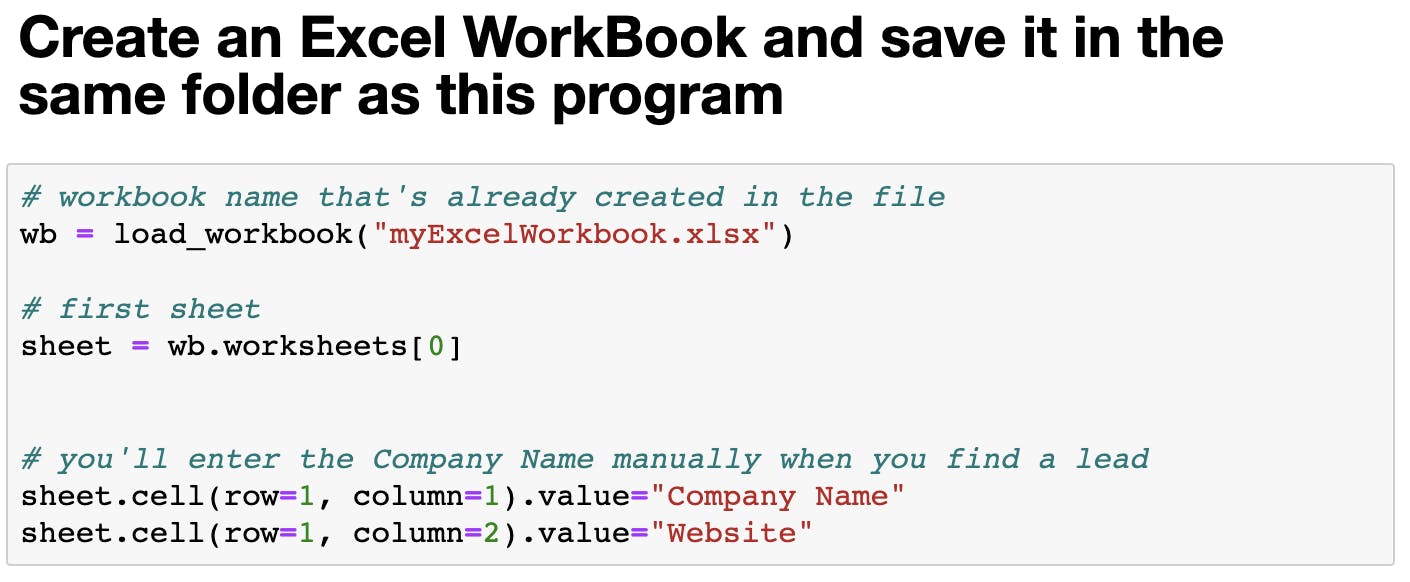

Part 4 - Go back to your Jupyter notebook and target that Excel file

The following code loads the workbook, targets the sheet, and then targets the cells to add "Company Name" and "Website" as headers to the Excel spreadsheet.

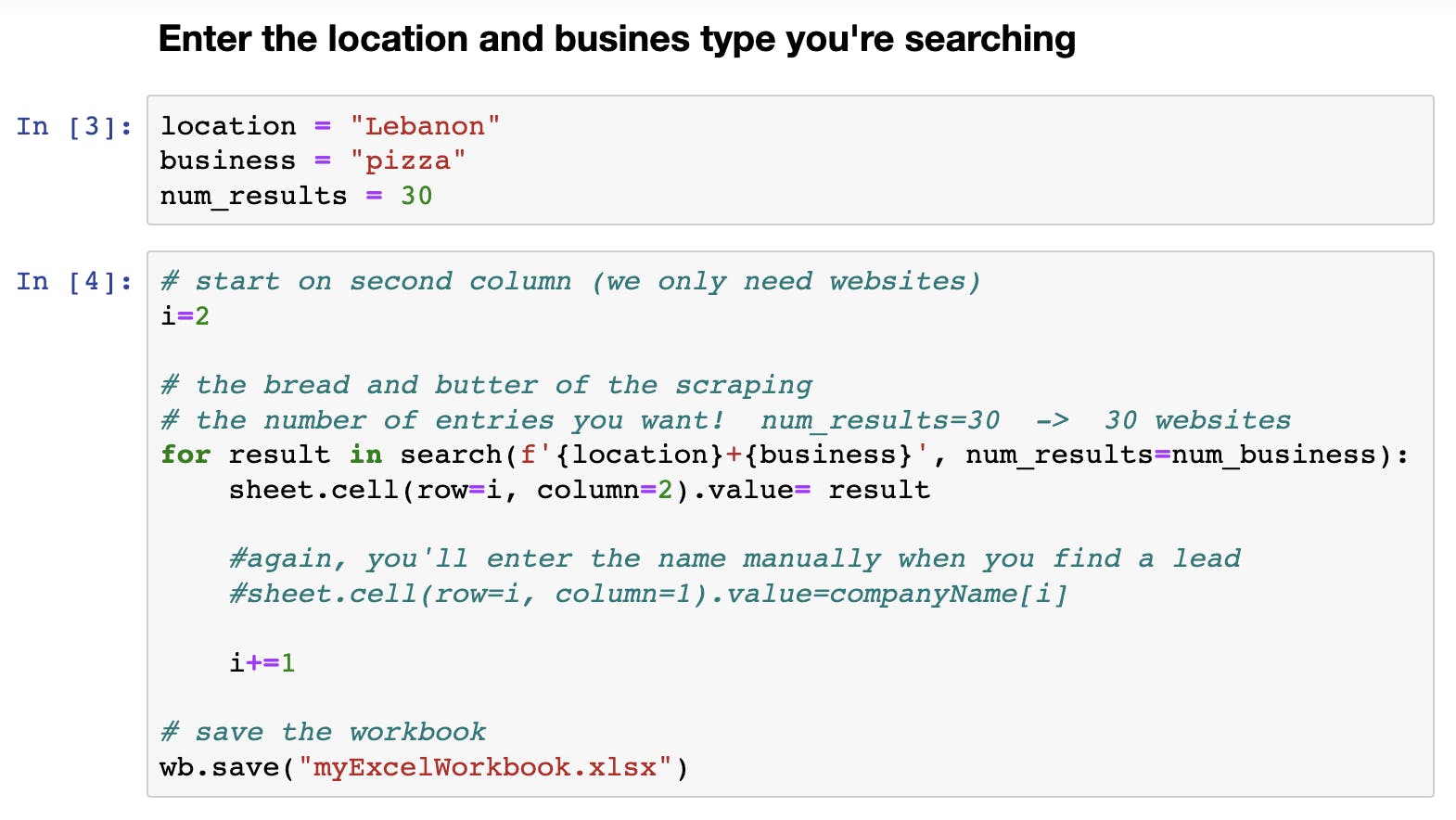
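The screenshot carries the actual code. As a text fallback, here is a minimal sketch of what this step could look like, assuming the openpyxl library and a placeholder file name of leads.xlsx (use whatever name you saved in Part 3). The function name add_headers is made up for illustration. One caveat: openpyxl writes to the file on disk, so on Windows the save may fail if the workbook is still open in Excel.

```python
from openpyxl import load_workbook


def add_headers(path):
    """Load the workbook at `path`, write the two header cells, and save it."""
    workbook = load_workbook(path)
    sheet = workbook.active            # the first (active) sheet
    sheet["A1"] = "Company Name"
    sheet["B1"] = "Website"
    workbook.save(path)                # write the change back to disk
    return sheet["A1"].value, sheet["B1"].value


# Usage (file name is an assumption):
# add_headers("leads.xlsx")
```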

Once that's all set up, enter the location and the type of business you want to search for. I chose "Lebanon" and "Pizza."

Part 5 - Set your desired Location, Business, Number of Results and RUN!

When you're doing this, be sure to set num_results to something manageable. Sure, you can set it to 5000 and the program would have no qualms...

HOWEVER...

You're going to be doing too much work afterwards. I'll explain...

What's the scraper doing? (2 simple things)

It performs a Google search with your location and business inputs (feel free to add more if you want!), copies the website address, and then pastes it into the Excel file. Done and dusted. Simple. I like 30 websites.

But now! We have to check all these websites for responsiveness.
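To make the search step concrete, here is a rough sketch under a few assumptions. The function names build_query and collect_websites are hypothetical, and the search backend is passed in as search_fn so the logic does not depend on any one library. The commented usage shows how it might be wired to the googlesearch-python package, which is my guess at a suitable backend rather than something confirmed above.

```python
def build_query(location, business):
    """Combine the two inputs into one search string, e.g. 'Pizza Lebanon'."""
    return f"{business} {location}".strip()


def collect_websites(location, business, num_results, search_fn):
    """Run the search and return up to `num_results` website addresses.

    `search_fn` is any callable taking (query, num_results) and yielding URLs.
    """
    query = build_query(location, business)
    urls = []
    for url in search_fn(query, num_results):
        if url and url.startswith("http"):   # skip empty or relative results
            urls.append(url)
        if len(urls) >= num_results:         # stop once we have enough
            break
    return urls


# Possible usage with googlesearch-python (an assumption):
# from googlesearch import search
# sites = collect_websites("Lebanon", "Pizza", 30,
#                          lambda q, n: search(q, num_results=n))
```

Each returned address can then be written to the next empty row of the spreadsheet.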

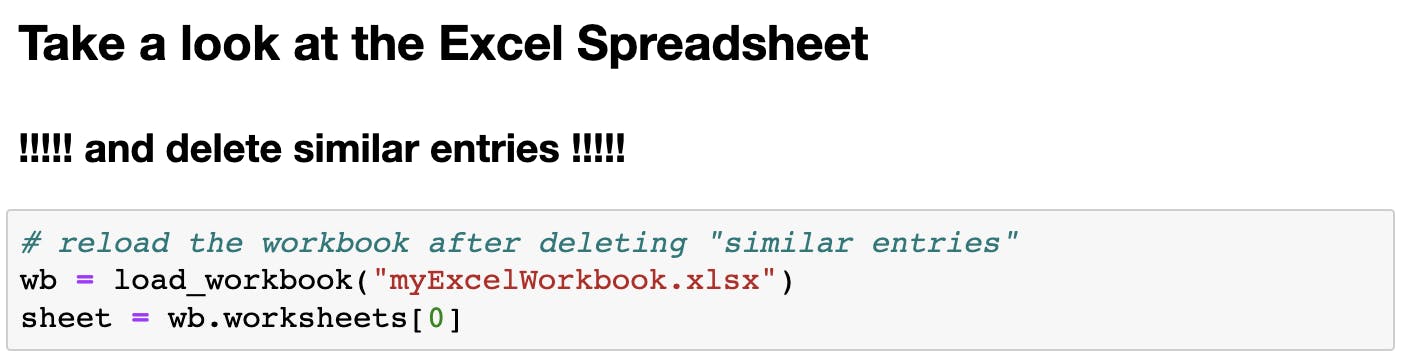

Part 6 - Delete similar entries

Take a look at the spreadsheet and delete any similar entries. Sometimes it will return more than one entry for the same website.

We don't want that. Delete the extras. It doesn't happen too often, but it can.
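Doing this by hand is fine for 30 rows. For larger runs, the cleanup could be automated. The sketch below is an optional addition, and it assumes that "similar" means two addresses share the same host name once case and a leading "www." are ignored. The function names site_key and drop_similar are hypothetical.

```python
from urllib.parse import urlparse


def site_key(url):
    """Reduce a URL to its host name, ignoring case and a leading 'www.'."""
    host = urlparse(url).hostname or ""      # hostname is already lowercased
    return host[4:] if host.startswith("www.") else host


def drop_similar(urls):
    """Keep the first URL seen for each host, preserving the original order."""
    seen = set()
    unique = []
    for url in urls:
        key = site_key(url)
        # Entries without a scheme (no 'http://') have no host and are dropped.
        if key and key not in seen:
            seen.add(key)
            unique.append(url)
    return unique
```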

Part 7 - After deleting, reload the workbook

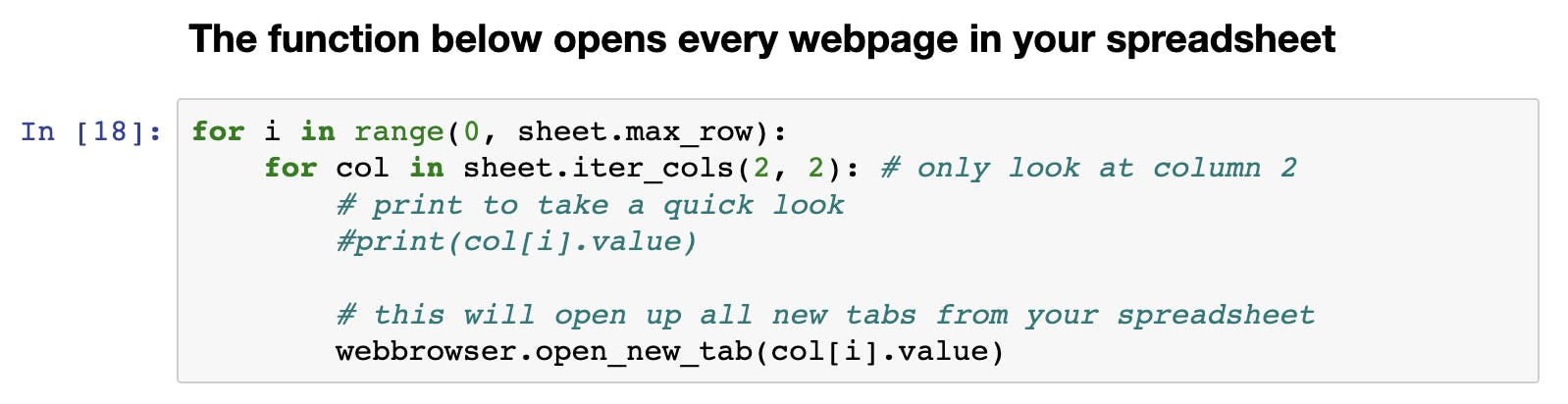
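For readers who can't see the screenshot, reloading could look roughly like this. It is a sketch assuming openpyxl and the layout from Part 4 (headers in row 1, websites in column B). The function name read_websites is made up for illustration.

```python
from openpyxl import load_workbook


def read_websites(path):
    """Reload the workbook from disk and return the Website column (B)."""
    workbook = load_workbook(path)
    sheet = workbook.active
    urls = []
    # Start at row 2 to skip the header row; read only column B.
    for (value,) in sheet.iter_rows(min_row=2, min_col=2, max_col=2,
                                    values_only=True):
        if value:                            # ignore cells emptied during cleanup
            urls.append(str(value).strip())
    return urls
```

Remember to save the spreadsheet in Excel after deleting rows, since the reload only sees what is on disk.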

Part 8 - Run the browser manipulation function to open up all those web pages

30 is manageable. 5000 might crash your computer. Or just be a headache.

This just opens up every page from the Excel file.
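A minimal sketch of such a function, using Python's standard webbrowser module. The name open_all and the limit parameter are assumptions added here as a guard against opening thousands of tabs by accident.

```python
import webbrowser


def open_all(urls, limit=30, opener=webbrowser.open_new_tab):
    """Open up to `limit` URLs, one browser tab each; return how many were opened."""
    opened = 0
    for url in urls[:limit]:
        opener(url)                          # defaults to a new tab in your browser
        opened += 1
    return opened


# Usage: pass in the list of addresses read from the spreadsheet.
# open_all(["https://example.com"])
```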

That's it!

Now, all you have to do is flip through the pages (quick) and mark down whether or not each site is responsive (easy).

Now you have your leads. My first run led to...

30 websites -> 8 leads -> 8 cold calls -> 2-3 possible customers.

All in all (after writing the code), it saved me about 1 minute per website. If I shoot for 500 websites, that's roughly 500 minutes, or a little over 8 hours, saved.

Hope you enjoyed!

Sources: header picture: antevenio.com/usa/what-is-web-scraping-and-..